Deep learning improves image reconstruction in optical coherence tomography using less data

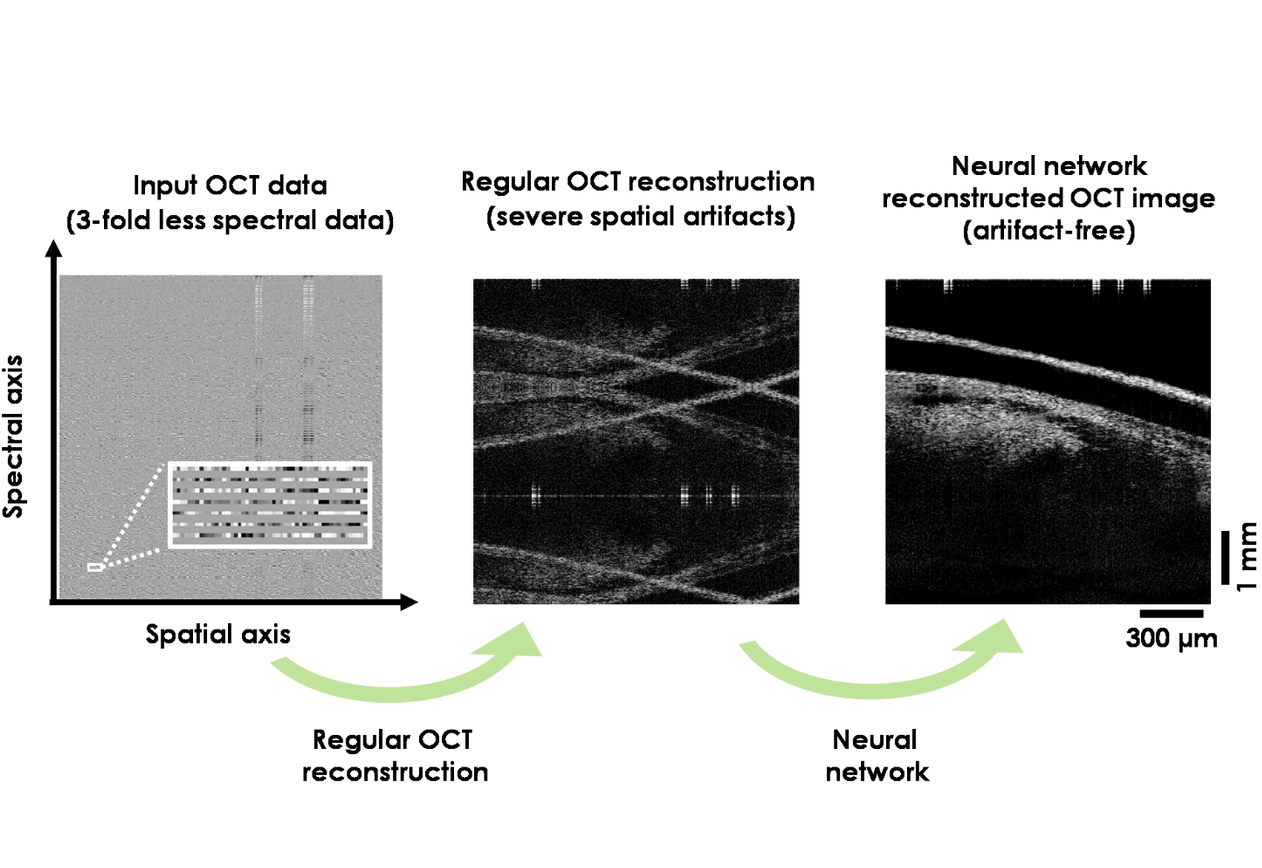

Figure Caption: Deep learning improves image reconstruction in optical coherence tomography using significantly less spectral data.

Image credit: Ozcan Lab @UCLA.

Optical coherence tomography (OCT) is a non-invasive imaging method that can provide 3D information about biological samples. The first generation of OCT systems was based on time-domain imaging, using a mechanical scanning set-up. However, the relatively slow data acquisition speed of these earlier time-domain OCT systems partially limited their use for imaging live specimens. The introduction of spectral-domain OCT techniques with higher sensitivity has contributed to a dramatic increase in imaging speed and quality. OCT is now widely used in diagnostic medicine, for example in ophthalmology, to noninvasively obtain detailed 3D images of the retina and underlying tissue structure.

In a new paper published in Light: Science & Applications, a team of UCLA and University of Houston (UH) scientists has developed a deep learning-based OCT image reconstruction method that can successfully generate 3D images of tissue specimens using significantly less spectral data than normally required. With the standard image reconstruction methods employed in OCT, undersampled spectral data, where some of the spectral measurements are omitted, would result in severe spatial artifacts in the reconstructed images, obscuring 3D information and structural details of the sample to be visualized. In their new approach, the UCLA and UH researchers trained a neural network using deep learning to rapidly reconstruct 3D images of tissue samples with much less spectral data than normally acquired in a typical OCT system, successfully removing the spatial artifacts observed in standard image reconstruction methods.
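To illustrate why omitting spectral measurements produces spatial artifacts under standard reconstruction, here is a minimal sketch in Python. It is not the authors' pipeline or their neural network. It assumes a simplified model in which a depth scan (A-line) is the magnitude of the Fourier transform of the spectral fringe, a single reflector, and uniform 3-fold decimation of the spectrum. The sample count `N_FULL`, the reflector position `DEPTH_BIN`, and both helper functions are hypothetical choices made for the example.

```python
import numpy as np


def simulate_fringe(n_samples: int, depth_bin: int) -> np.ndarray:
    """Spectral fringe from one reflector (hypothetical toy model).

    A deeper reflector produces a faster oscillation across the spectrum.
    """
    k = np.arange(n_samples)
    return 1.0 + np.cos(2 * np.pi * depth_bin * k / n_samples)


def reconstruct_a_line(spectrum: np.ndarray) -> np.ndarray:
    """Fourier-based reconstruction: remove the DC term, then take |FFT|."""
    centered = spectrum - spectrum.mean()
    return np.abs(np.fft.rfft(centered))


N_FULL = 1536      # assumed number of spectral samples per A-line
UNDERSAMPLE = 3    # keep every third sample (3-fold less spectral data)
DEPTH_BIN = 400    # assumed reflector depth, in full-resolution FFT bins

full_spectrum = simulate_fringe(N_FULL, DEPTH_BIN)
under_spectrum = full_spectrum[::UNDERSAMPLE]  # 512 samples remain

peak_full = int(np.argmax(reconstruct_a_line(full_spectrum)))
peak_under = int(np.argmax(reconstruct_a_line(under_spectrum)))

print(peak_full)   # 400: the reflector appears at its true depth
print(peak_under)  # 112: the reflector is aliased to a wrong depth
```

In this toy model the undersampled spectrum can only represent depths up to bin 256, so the reflector at bin 400 folds back to bin 512 - 400 = 112. Aliasing of this kind is one example of the spatial artifacts that standard reconstruction produces from undersampled spectral data.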

The efficacy and robustness of this new method were demonstrated by imaging various human and mouse tissue samples using three-fold less spectral data captured by a state-of-the-art swept-source OCT system. Running on graphics processing units (GPUs), the neural network successfully eliminated the severe spatial artifacts caused by undersampling and omission of most spectral data points, taking less than one-thousandth of a second for an OCT image composed of 512 depth scans (A-lines).

“These results highlight the transformative potential of this neural network-based OCT image reconstruction framework, which can be easily integrated with various spectral domain OCT systems, to improve their 3D imaging speed without sacrificing resolution or signal-to-noise of the reconstructed images,” said Dr. Aydogan Ozcan, the Chancellor’s Professor of Electrical and Computer Engineering at UCLA and an associate director of the California NanoSystems Institute, who is the senior corresponding author of the work.

This research was led by Dr. Ozcan, in collaboration with Dr. Kirill Larin, a Professor of Biomedical Engineering at the University of Houston. The other authors of this work are Yijie Zhang, Tairan Liu, Manmohan Singh, Ege Çetintaş, and Yair Rivenson. Dr. Ozcan also holds UCLA faculty appointments in bioengineering and surgery, and is an HHMI Professor.

Link to the paper: https://www.nature.com/articles/s41377-021-00594-7