Diffractive optical networks use object shifts for performance boost

Optical computing has been gaining wide interest for machine learning applications because of the massive parallelism and bandwidth of optics. Diffractive networks provide one such computing paradigm based on the transformation of the input light as it diffracts through a set of spatially-engineered surfaces, performing computation at the speed of light propagation without requiring any external power apart from the input light beam. Among numerous other applications, diffractive networks have been demonstrated to perform all-optical classification of input objects.

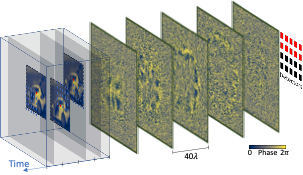

Researchers at the University of California, Los Angeles (UCLA), led by Professor Aydogan Ozcan, have introduced a ‘time-lapse’ scheme that significantly improves the image classification accuracy of diffractive optical networks on complex input objects. In this scheme, the object and/or the diffractive network are moved relative to each other while the output detectors are exposed, so that each detector integrates the signal over the movement. A similar ‘time-lapse’ approach has previously been used to achieve super-resolution imaging, for example in security cameras, by capturing multiple images of a scene with lateral movements of the camera. Drawing inspiration from the success of time-lapse super-resolution imaging, the UCLA researchers used ‘time-lapse diffractive networks’ to achieve >62% blind testing accuracy on the all-optical classification of CIFAR-10 images, a publicly available dataset containing images of airplanes, cars, cats, etc. This is a significant improvement over time-static diffractive optical networks.
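The idea of integrating the detector signal over several object positions can be sketched numerically. The following is a minimal illustration under stated assumptions, not the authors' implementation: it assumes scalar angular-spectrum propagation, phase-only diffractive layers with random (untrained) phase values, circular lateral shifts via `np.roll`, and hypothetical values for the wavelength, pixel pitch, layer spacing and detector layout. The function names are illustrative.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, z):
    """Propagate a square complex field by distance z (scalar diffraction)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)                      # spatial frequencies
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Keep propagating components only; evanescent ones are dropped.
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def make_detectors(n, num_classes=10, size=4):
    """Hypothetical layout: one small square detector region per class."""
    detectors = []
    for k in range(num_classes):
        r, c = 4 + 12 * (k // 5), 2 + 6 * (k % 5)
        mask = np.zeros((n, n))
        mask[r:r + size, c:c + size] = 1.0
        detectors.append(mask)
    return detectors

def time_lapse_scores(obj, phase_masks, shifts, detectors, wavelength, dx, z):
    """Integrate output intensity over object shifts, then read class scores."""
    integrated = np.zeros(obj.shape)
    for sy, sx in shifts:
        # Assumption: lateral movement modeled as a circular pixel shift.
        field = np.roll(obj, (sy, sx), axis=(0, 1)).astype(complex)
        for mask in phase_masks:
            field = angular_spectrum(field, wavelength, dx, z)
            field = field * np.exp(1j * mask)         # phase-only layer
        field = angular_spectrum(field, wavelength, dx, z)
        integrated += np.abs(field) ** 2              # detector integration
    return np.array([(integrated * d).sum() for d in detectors])

# Toy usage with random, untrained layers (all values are assumptions).
rng = np.random.default_rng(0)
n = 32
obj = rng.random((n, n))
layers = [rng.uniform(0, 2 * np.pi, (n, n)) for _ in range(3)]
shifts = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1)]
scores = time_lapse_scores(obj, layers, shifts, make_detectors(n),
                           wavelength=0.75e-3, dx=0.4e-3, z=30e-3)
print("predicted class index:", int(np.argmax(scores)))
```

In a trained system the phase values would be optimized so that the detector with the largest integrated signal indicates the object class; here they are random, so the prediction carries no meaning beyond showing the data flow.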

The same research group previously demonstrated ensemble learning of diffractive networks, where several diffractive networks operate in unison to improve image classification accuracy. With the ‘time-lapse’ scheme, however, a single standalone diffractive network can outperform an ensemble of >15 networks, significantly reducing the footprint of the diffractive system while eliminating the complexities of physically aligning and synchronizing several individual networks. The researchers also explored incorporating ensemble learning into time-lapse image classification, which yielded >65% blind testing accuracy in classifying CIFAR-10 images.

The simplest physical implementation of the time-lapse classification scheme would exploit the natural jitter of the objects or the diffractive camera during imaging. The benefit of time-lapse could then be reaped at no additional cost apart from a slight increase in inference time, caused by integrating the detector signal during the jitter.

This research on time-lapse image classification demonstrates the use of the temporal degrees of freedom of optical fields for optical computing and represents a significant step toward all-optical spatiotemporal information processing with compact, low-cost, passive materials.

Reference: Md Sadman Sakib Rahman and Aydogan Ozcan, Time-lapse image classification using a diffractive neural network, Advanced Intelligent Systems (2023). DOI: 10.1002/aisy.202200387