Image denoising using a diffractive material

Image denoising algorithms have been studied extensively over the past decades, but classical denoising techniques often require many iterations at inference time, which makes them poorly suited to real-time applications. Deep neural networks (DNNs) changed this by enabling non-iterative, feed-forward digital image denoising. These DNN-based methods can run in real time while maintaining high denoising accuracy. The trade-off is that these digital denoisers depend on costly, resource- and power-intensive graphics processing units (GPUs).

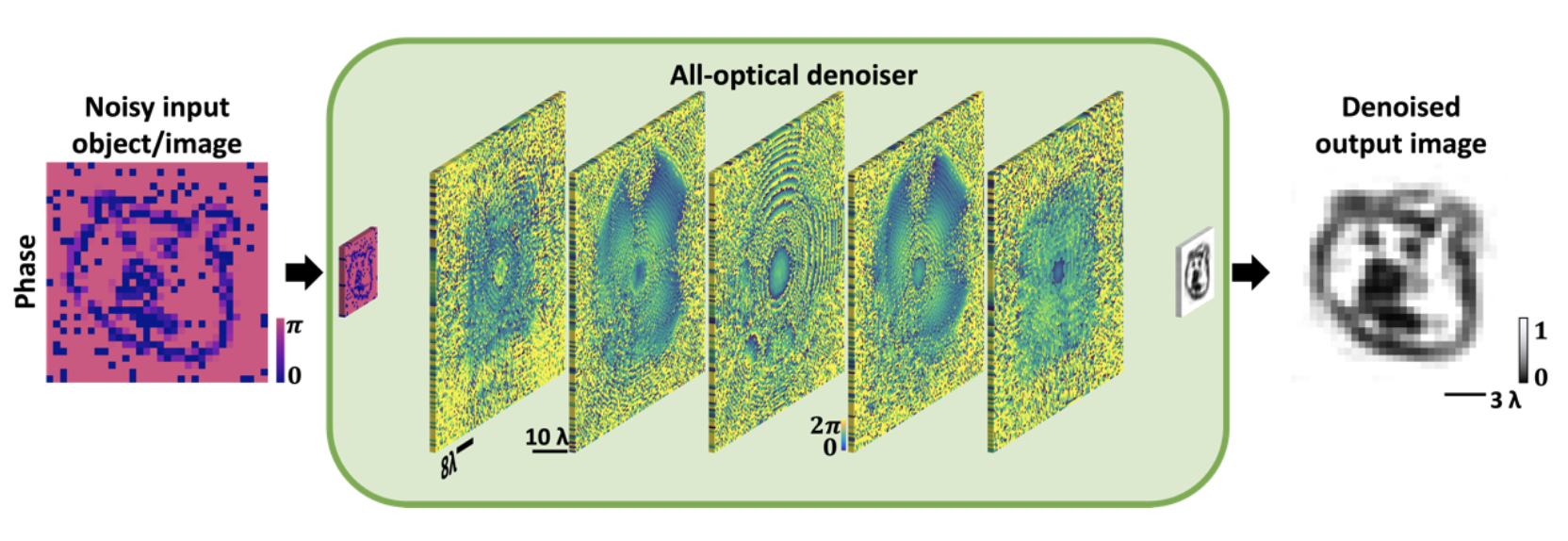

In a new article published in Light: Science & Applications, a team of researchers led by Professors Aydogan Ozcan and Mona Jarrahi of the University of California, Los Angeles (UCLA), USA, and Professor Kaan Aksit of University College London (UCL), UK, developed a physical image denoiser made of spatially engineered diffractive layers. The device processes noisy input images at the speed of light and synthesizes denoised images at its output field-of-view without any digital computing.
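To give a rough sense of how light passing through a stack of diffractive layers can be simulated on a computer, here is a minimal sketch in Python. It rests on assumptions that are not stated in this article: each layer is modeled as a phase-only mask, free-space propagation between layers uses the standard angular spectrum method, and random phases stand in for trained ones. The function names `propagate` and `diffractive_forward` and all numeric values are hypothetical and chosen only for illustration.

```python
import numpy as np

def propagate(field, wavelength, pixel_size, distance):
    """Free-space propagation of a complex field (angular spectrum method)."""
    ny, nx = field.shape
    fy = np.fft.fftfreq(ny, d=pixel_size)  # spatial frequencies along axis 0
    fx = np.fft.fftfreq(nx, d=pixel_size)  # spatial frequencies along axis 1
    fyy, fxx = np.meshgrid(fy, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are simply dropped in this sketch.
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def diffractive_forward(input_field, phase_layers, wavelength, pixel_size, spacing):
    """Pass a field through phase-only layers separated by `spacing`.

    Returns the intensity at the output plane. Assumption: every layer
    only delays the phase of the light and absorbs nothing.
    """
    field = propagate(input_field, wavelength, pixel_size, spacing)
    for phase in phase_layers:
        field = field * np.exp(1j * phase)  # phase modulation by one layer
        field = propagate(field, wavelength, pixel_size, spacing)
    return np.abs(field) ** 2

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((32, 32)).astype(complex)  # placeholder input image
    # Random phases stand in for trained layers (illustrative only).
    layers = [rng.uniform(0.0, 2.0 * np.pi, (32, 32)) for _ in range(3)]
    output = diffractive_forward(image, layers, 0.75e-3, 0.6e-3, 20e-3)
    print(output.shape)
```

In a training loop, the phase values of each layer would be the parameters being optimized; the sketch covers only the forward pass.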

After a one-time training on a computer, the passive diffractive layers of the resulting visual processor are fabricated, forming a physical image denoiser that scatters out the optical modes associated with undesired noise or spatial artifacts in the input images. Through its optimized design, this diffractive visual processor preserves the optical modes that represent the desired spatial features of the input images with minimal distortion. As a result, it instantly synthesizes denoised images within its output field-of-view, without the need to digitize, store or transmit an image for a digital processor to act on. The efficacy of this all-optical image denoising approach was validated by suppressing salt-and-pepper noise in both intensity- and phase-encoded input images. Furthermore, this physical image denoising framework was experimentally demonstrated using terahertz radiation and a 3D-fabricated diffractive denoiser.
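For readers unfamiliar with salt-and-pepper noise, the following Python sketch shows one common way to simulate it on an intensity image. It is an illustration only, not the procedure used in the paper: the function name `add_salt_and_pepper`, the equal split between salt and pepper pixels, and the assumption that pixel values lie in [0, 1] are all choices made here.

```python
import numpy as np

def add_salt_and_pepper(image, noise_prob, rng=None):
    """Return a noisy copy of `image`, whose values are assumed to lie in [0, 1].

    Each pixel is independently replaced, with probability `noise_prob`,
    by either 1 (salt) or 0 (pepper) with equal chance.
    """
    rng = np.random.default_rng() if rng is None else rng
    noisy = np.array(image, dtype=float, copy=True)
    corrupt = rng.random(noisy.shape) < noise_prob  # which pixels to corrupt
    salt = rng.random(noisy.shape) < 0.5            # salt vs. pepper choice
    noisy[corrupt & salt] = 1.0
    noisy[corrupt & ~salt] = 0.0
    return noisy

if __name__ == "__main__":
    clean = np.full((64, 64), 0.5)  # flat gray placeholder image
    noisy = add_salt_and_pepper(clean, 0.2, rng=np.random.default_rng(0))
    print(f"fraction of corrupted pixels: {np.mean(noisy != clean):.3f}")
```

A phase-encoded input could be modeled along the same lines by mapping the noisy values to a phase, for example `np.exp(1j * np.pi * noisy)`, although that particular mapping is also an assumption.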

This all-optical image denoising framework offers several important advantages, such as low power consumption, ultra-high speed, and compact size. The research team envisions that the success of these all-optical image denoisers can catalyze the development of all-optical visual processors tailored to address various inverse problems in imaging and sensing.

The authors of this work are Cagatay Isil, Tianyi Gan, F. Onuralp Ardic, Koray Mentesoglu, Jagrit Digani, Huseyin Karaca, Hanlong Chen, Jingxi Li, Deniz Mengu, Mona Jarrahi, and Aydogan Ozcan of the UCLA Samueli School of Engineering, as well as Kaan Aksit of the Department of Computer Science at UCL. The researchers acknowledge funding from the US Department of Energy (DOE).

See the article:

Cagatay Isil, Tianyi Gan, F. Onuralp Ardic, Koray Mentesoglu, Jagrit Digani, Huseyin Karaca, Hanlong Chen, Jingxi Li, Deniz Mengu, Mona Jarrahi, Kaan Aksit, and Aydogan Ozcan, “All-optical image denoising using a diffractive visual processor,” Light: Science & Applications (2024).

https://www.nature.com/articles/s41377-024-01385-6