UCLA HCI at UIST 2022

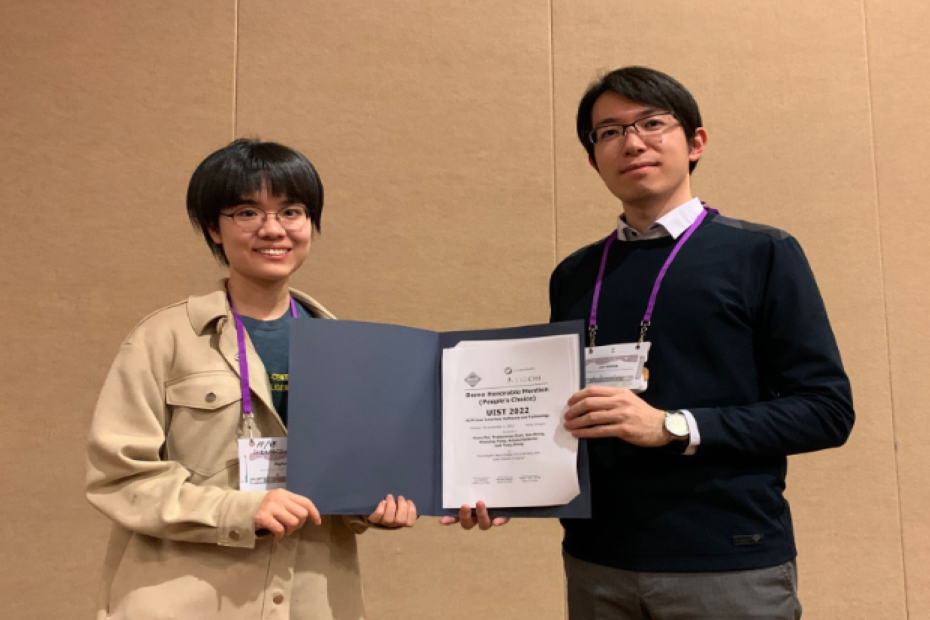

UCLA ECE published three papers and received two awards this week at UIST, a top Human-Computer Interaction conference. The papers were led by students from the labs of Profs. Anthony Chen, Achuta Kadambi, and Yang Zhang. One paper received a Best Paper Award (top 3 of 367 submissions), and another paper’s companion demo received a People’s Choice Best Demo Honorable Mention (top 2 of 73 demos).

The ACM Symposium on User Interface Software and Technology (UIST) is the premier forum for innovations in human-computer interfaces (https://dl.acm.org/conference/uist). Sponsored by ACM’s special interest groups on computer-human interaction (SIGCHI) and computer graphics (SIGGRAPH), UIST brings together researchers and practitioners from diverse areas. The UIST review process is highly competitive: this year, 95 of 367 submissions were accepted, an acceptance rate of 25.9%. The three papers from UCLA ECE address challenges in today’s interactive systems on three fronts: accessibility, AI, and smart environment sensing.

CrossA11y (https://doi.org/10.1145/3526113.3545703), led by student Xingyu Liu, improves the accessibility of online videos by helping authors make accessibility edits more efficiently. Take YouTube, for example. Authors often make their videos visually accessible by adding audio descriptions (AD) and auditorily accessible by adding closed captions (CC). However, creating AD and CC is challenging and tedious, especially for non-professional describers and captioners, because accessibility problems in a video are hard to identify. A video author must watch the entire video and manually check, frame by frame, for inaccessible information in both the visual and auditory modalities. CrossA11y helps authors efficiently detect and address visual and auditory accessibility issues by checking for modality asymmetries between visual and audio segments, that is, content conveyed in one modality but not the other.

GANzilla (https://doi.org/10.1145/3526113.3545638), led by student Noyan Evirgen, helps users work with generative adversarial networks (GANs) more efficiently through iterative discovery. GANs are widely adopted in many application areas, such as data preprocessing, image editing, and creativity support. However, the ‘black box’ nature of GANs makes it hard for non-expert users to control what data a model generates, which has spawned a large body of prior work on algorithm-driven approaches that extract editing directions for controlling GANs. In contrast, GANzilla is a user-driven tool that guides users to iteratively discover directions that meet their editing goals, using a scatter/gather technique.

ForceSight (https://dl.acm.org/doi/10.1145/3526113.3545622), led by student Siyou Pei, uses laser speckle imaging to remotely sense force on everyday surfaces. Force sensing has been a key enabling technology for a wide range of interfaces, such as digitally enhanced body and world surfaces for touch interaction. In addition, force often carries rich contextual information about user activities and can enhance machine perception for improved user and environment awareness. Conventional approaches sense force with contact sensors made of pressure-sensitive materials, such as piezo films and discs or force-sensitive resistors. The key observation behind this research is that object surfaces deform when force is applied. This deformation, though minute, produces observable and discernible shifts in the laser speckle pattern, which ForceSight leverages to sense the applied force. This non-contact force-sensing capability opens up new opportunities for rich interactions and can power user- and environment-aware interfaces.